The term “deep learning” refers to a subset of machine learning that uses multi-layered neural networks. Loosely modeled on how the human brain processes information, these networks enable computers to learn from enormous volumes of data. Deep learning models are especially useful for complicated tasks like image and speech recognition because they automatically find patterns and features in data, unlike traditional algorithms that need explicit programming for every task.

Key Takeaways

- Deep learning is a subset of machine learning that uses neural networks to mimic the way the human brain processes information.

- Deep learning has its roots in the 1940s, but it wasn’t until the 2010s that it gained widespread attention and application.

- Deep learning has applications in image and speech recognition, natural language processing, and autonomous vehicles, among others.

- Deep learning differs from traditional machine learning in its ability to automatically learn features from data, rather than relying on manual feature engineering.

- The future of deep learning holds promise for advancements in healthcare, finance, and other industries, but also presents challenges such as data privacy and ethical considerations.

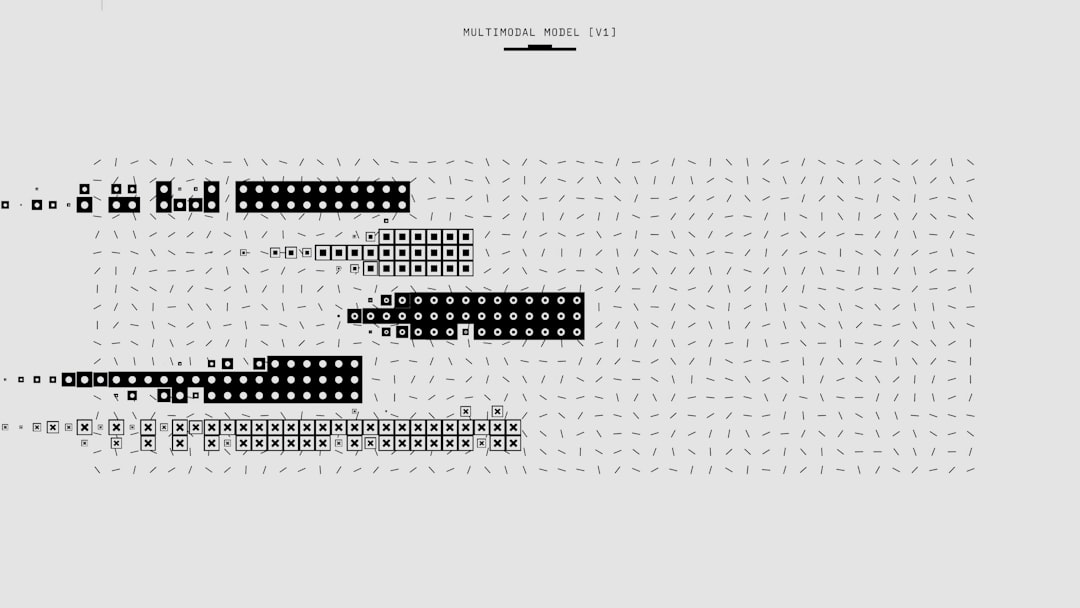

Deep learning primarily uses artificial neural networks (ANNs), which are made up of interconnected neurons, or nodes, arranged in layers. Each neuron processes its inputs and passes its output on to the next layer. Network designs differ greatly: some models have only a few layers, while others have hundreds or even thousands. This layered approach lets deep learning systems identify complex patterns and relationships in data, which improves performance across a range of applications.

Deep learning got its start in the 1940s and 1950s, when scientists first began experimenting with artificial neural networks. Despite their simplicity and limitations, these early models laid the foundation for later developments. In 1958, Frank Rosenblatt introduced the Perceptron, a kind of neural network that could learn to classify inputs. The shortcomings of the early models, however, led to a drop in interest during the 1970s and 1980s, a period sometimes known as the “AI winter.” Deep learning’s comeback in the mid-2000s was propelled by the availability of large datasets and improvements in processing power, and researchers such as Yoshua Bengio, Yann LeCun, and Geoffrey Hinton played a large part in rekindling interest in neural networks.
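To make the idea of a network that "learns to classify inputs" concrete, here is a minimal sketch of a single perceptron trained with the classic error-correction rule. The function names, the learning rate, and the toy logical-AND dataset are illustrative assumptions, not details from Rosenblatt's original work.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of the inputs exceeds zero, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Train one perceptron by nudging weights after each misclassification."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            # Move the decision boundary toward the misclassified sample.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy dataset (assumed for illustration): logical AND, which is linearly separable.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
predictions = [predict(weights, bias, x) for x in samples]
print(predictions)
```

A single perceptron can only separate classes with a straight line (or hyperplane), which is one of the limitations that motivated stacking many such units into deeper networks.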

In 2006, Hinton and his colleagues proposed “deep belief networks,” which made it possible to pre-train deep architectures without supervision. This breakthrough showed that deeper networks could be trained efficiently, and it paved the way for major improvements in tasks like speech recognition and image classification.

Deep learning has since been applied in many domains, transforming industries by allowing machines to carry out tasks previously believed to be human-only. In medicine, deep learning algorithms analyze medical images, helping radiologists find abnormalities such as tumors in MRIs and X-rays. For example, an AI system developed by DeepMind, a Google-owned research lab, can analyze eye scans with a high degree of accuracy, which may help prevent blindness through early detection. In natural language processing (NLP), deep learning has changed how machines comprehend and produce human language. Technologies such as chatbots and virtual assistants use deep learning models to understand user inquiries and deliver pertinent answers. OpenAI’s GPT-3 is a good illustration: it can produce coherent text in response to prompts, which makes it helpful for customer support, content creation, and other applications.

Although both deep learning and conventional machine learning fall under artificial intelligence, their methods and capabilities differ considerably. Traditional machine learning algorithms frequently depend on feature engineering, a process in which domain experts manually choose the pertinent features of the data for the model to learn from. This process can be time-consuming and may not always produce the best results, particularly with high-dimensional data.
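As a rough illustration of manual feature engineering, the sketch below reduces a raw one-dimensional signal to a handful of hand-picked summary statistics that a traditional model could then consume. The function name `extract_features` and the specific features chosen are hypothetical; in practice a domain expert would select features suited to the problem.

```python
import statistics


def extract_features(signal):
    """Summarize a raw 1-D signal with hand-crafted features (illustrative choices)."""
    # Count sign changes between consecutive samples.
    zero_crossings = sum(
        1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0)
    )
    return {
        "mean": statistics.fmean(signal),
        "stdev": statistics.pstdev(signal),
        "peak": max(abs(v) for v in signal),
        "zero_crossings": zero_crossings,
    }


# Made-up sample data, assumed purely for demonstration.
signal = [0.5, -0.5, 1.0, -1.0, 0.0, 2.0]
features = extract_features(signal)
print(features)
```

Every feature here encodes a human decision about what matters in the data, which is exactly the step that becomes costly as the data grows more complex.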

Deep learning’s multi-layered architecture, on the other hand, automates feature extraction. A deep neural network’s first layers pick up basic features, which later layers combine into more intricate representations. Thanks to this hierarchical learning process, deep learning models thrive on unstructured data such as audio and images, where conventional techniques might falter. Deep learning models can also outperform traditional methods on such tasks, but they usually need larger datasets to do so.

As researchers continue to push the limits of what neural networks can do, deep learning seems to have a bright future. One area of active investigation is the design of more efficient architectures that perform well while using less processing power. Techniques like model pruning and quantization reduce the size and complexity of deep learning models with little loss of accuracy, making them easier to deploy on edge devices such as smartphones and Internet of Things gadgets. Combining deep learning with other cutting-edge technologies like quantum computing could bring further advances in processing speed and problem-solving ability.
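The pruning and quantization techniques mentioned above can be illustrated with a minimal sketch on a plain list of weights. It assumes magnitude-based pruning and symmetric 8-bit linear quantization, two common variants chosen here for simplicity; the function names and sample weights are hypothetical, and real frameworks apply these ideas to whole tensors with considerably more care.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from their integer codes."""
    return [q * scale for q in quantized]


# Made-up weights, assumed purely for demonstration.
weights = [0.02, -1.27, 0.5, -0.01, 0.9, 0.003]
pruned = prune_by_magnitude(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)
print(pruned, quantized, restored)
```

Pruning makes the weight list sparse, and quantization lets each remaining weight be stored in one byte instead of a 32-bit float, at the cost of a rounding error of at most half the scale per weight.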

As quantum computers become more practical, deep learning models may be able to address challenging problems that are currently unsolvable with traditional computing techniques. This convergence may lead to advances in everything from drug discovery to climate modeling.

Even with its impressive potential, deep learning has drawbacks. A major one is that training requires large volumes of labeled data. Acquiring and annotating datasets can take a lot of time and resources, especially in specialized fields like autonomous driving or medicine. Moreover, deep learning models are frequently regarded as “black boxes” because it is difficult to understand how they make decisions. This lack of transparency raises questions of accountability and trust, particularly in critical fields like healthcare or finance.

The amount of computing power needed to train deep learning models presents another difficulty. High-performance GPUs or specialized hardware such as TPUs are frequently required to manage the extensive computations involved in training large networks, which can put up obstacles for researchers or smaller organizations with less access to such resources. In addition, a model that fits its training data too closely may perform poorly on unseen data, a phenomenon known as overfitting.

Deep learning has significantly impacted many business domains, spurring efficiency and innovation. In the financial industry, for example, businesses use deep learning algorithms to detect fraud by examining transaction patterns and spotting irregularities that might indicate fraudulent activity. By learning from fresh data, these systems can adapt over time, increasing accuracy and decreasing false positives. In retail, deep learning improves consumer experiences through tailored suggestions based on past browsing and purchase activity; e-commerce giants like Amazon use recommendation engines driven by deep learning to suggest products that match user preferences. Supply chain management also benefits from predictive analytics powered by deep learning models that anticipate demand patterns and optimize inventory levels.

There are a number of tools and routes for people who want to explore deep learning in depth. A strong mathematical background, especially in linear algebra, calculus, and statistics, is necessary to understand the fundamental ideas behind neural networks. Online courses on platforms such as edX or Coursera provide structured learning experiences covering both theoretical concepts and practical implementations. Programming skills, particularly in Python, are essential because many well-known deep learning frameworks, including TensorFlow and PyTorch, are built around it.

Participating in open-source projects on sites like GitHub offers practical experience and the chance to collaborate with community members. Competing on sites like Kaggle can also help users hone their skills by solving real-world problems and getting feedback from peers. As one goes deeper into the field, investigating specialized areas like computer vision or natural language processing can further enhance expertise.

Deep learning is a constantly changing field, and keeping up with recent research papers and going to conferences can give you insights into the latest advancements and emerging trends.